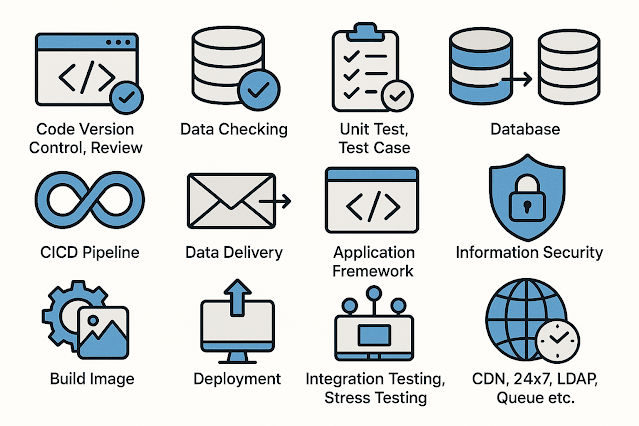

有鑑於先前在LinkedIn分享「害怕資訊技術」的職場現象,決定寫下這一篇淺顯易懂文章(希望真的是淺顯易懂!)來向大家科普一下。我會以「資料處理」說明6項常見的資訊基礎建設,以及以「應用程式系統」說明另外6項,總共12項資訊基礎及其任務角色,本人並非完全熟練這12項要點的實作,但隨著工作任務的推進,與經驗、技術的累積,實作能力也將持續進化,歡迎多多交流。

Following my earlier LinkedIn post about the workplace phenomenon of "fear of information technology", I decided to write this easy-to-understand article (I hope it really is easy to understand!) as a primer. Under "data processing" I cover 6 common pieces of information infrastructure, and under "application systems" another 6, for a total of 12 items and the roles responsible for them. I'm not fully proficient in implementing all 12, but as my work progresses and I gain experience and skills, my abilities will keep improving. Discussion and other viewpoints are welcome.

資料處理 Data Processing

#infra #coding #Python #data_engineering #DataOps

「資料處理」方面需要注意以下6項要點:

There are 6 key points to note for data processing:

1. 程式碼版本控制與檢視 Code Version Control and Review

無論是資料產品還是應用程式產品,以Git實作版本控制,都是必要的基礎建設,建議區分Git使用者權限,並將重要的分支設定好保護機制(Branch Protect),每一次釋出改版的程式碼,都必須提出Merge Request,由管理者檢視程式碼測試結果後,再做Merge的動作。

以撰寫Python程式碼為例,建議開發者以Pylint做靜態分析,且有寫設計文件的習慣,以利他人閱讀程式碼前可快速理解程式的設計概況。

Whether you are building a data product or an application, version control with Git is essential infrastructure. It's recommended to differentiate Git user permissions and to set up branch protection on important branches. Every code release must go through a merge request, and a maintainer should review the code and its test results before merging.

Taking Python as an example, developers are advised to run Pylint for static analysis and to make a habit of writing design documents, so that others can quickly grasp the program's design before reading the code.

2. 資料檢查機制 Data Checking

資料產品品質的良窳,可能會受到「原始資料來源」的狀況,以及「資料程式的處理過程」所影響,這裡我們針對「原始資料來源」撰寫資料檢查程式來偵測有問題的資料。

如果是常見、可透過程式邏輯處理的資料,可以透過程式做資料清理的動作,如果是非預期的異常情形,則建議設置通知機制,一旦原始資料發生異常,需要以人工來判讀、處理,若研判可以透過程式處理,則需要持續修改程式,盡可能以程式做資料清理的動作,減少人工的介入。

資料品管工程師需要與資料維運工程師合作,將上述需要以人工介入的動作納入維運流程,以確保原始資料來源無論有無發生異常,皆可順暢執行維運工作。

The quality of a data product is affected by both the state of the raw data source and the data processing program. For the raw data source, we write data checking programs to detect problematic data.

Common issues that program logic can handle should be cleaned up in code. For unexpected anomalies, it's recommended to set up a notification mechanism, so that when the raw data goes wrong, someone can interpret and handle it manually. If the anomaly turns out to be something code can handle, the program should be updated so that cleaning is automated wherever possible and manual intervention is reduced.

Data quality assurance engineers need to work with DataOps engineers to build these manual steps into the operations process, so that operations run smoothly whether or not the raw data source has anomalies.
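As a rough illustration of the split described above, here is a minimal Python sketch. The `price` field, the `CheckResult` class, and the `check_rows` and `notify` functions are hypothetical names chosen for this example, and `send` stands in for whatever alert channel (email, chat) a team actually uses.

```python
from dataclasses import dataclass, field


@dataclass
class CheckResult:
    """Outcome of checking one batch of raw records."""
    clean_rows: list = field(default_factory=list)  # rows ready for processing
    anomalies: list = field(default_factory=list)   # rows that need a human


def check_rows(rows):
    """Clean known issues in code and set aside anything unexpected."""
    result = CheckResult()
    for row in rows:
        price = row.get("price")
        # Known, fixable issue: numbers that arrive as padded strings.
        if isinstance(price, str):
            try:
                price = float(price.strip())
            except ValueError:
                result.anomalies.append(row)
                continue
        # Unexpected issue: missing or negative values are not guessed at.
        if not isinstance(price, (int, float)) or price < 0:
            result.anomalies.append(row)
            continue
        result.clean_rows.append({**row, "price": float(price)})
    return result


def notify(anomalies, send=print):
    """Send one alert when anomalies exist and report whether it was sent."""
    if anomalies:
        send(f"{len(anomalies)} raw record(s) need manual review")
    return bool(anomalies)
```

When a kind of anomaly keeps recurring and proves fixable, its handling can move from the anomaly branch into the cleaning branch, which is how manual work shrinks over time.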

3. 單元測試與測試案例機制 Unit Testing and Test Case

資料產品品質的良窳,可能會受到「原始資料來源」的狀況,以及「資料程式的處理過程」所影響,這裡我們針對「資料程式的處理過程」撰寫單元測試或測試案例,避免資料實際處理過程,與原先開發時的構想,發生不一致的狀況,又或者是因為程式改版、套件升級的情形,發生資料處理異常的狀況。

以撰寫Python程式碼為例,發布到正式環境之前,必須以unittest或Pytest執行測試,實務上來說很難達成100%測試涵蓋度,畢竟開發時間與測試程式碼的維護也是需要考慮的,但建議至少要將核心計算功能、資料處理功能納入測試。

The quality of a data product is affected by both the state of the raw data source and the data processing program. For the data processing program, we write unit tests or test cases, so that the actual processing does not drift from what was intended during development, and so that program revisions or package upgrades do not break data processing unnoticed.

Taking Python as an example, tests should be run with unittest or Pytest before code is released to the production environment. In practice, 100% test coverage is hard to achieve, since development time and the cost of maintaining test code also have to be considered. At a minimum, the core computation and data processing functions should be covered.
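To make this concrete, here is a small sketch of testing one core computation with unittest. The `moving_average` function is a hypothetical example written for this article, not part of any particular data product.

```python
import unittest


def moving_average(values, window):
    """Return the simple moving average of `values` over `window` points."""
    if window <= 0:
        raise ValueError("window must be positive")
    return [
        sum(values[i - window + 1 : i + 1]) / window
        for i in range(window - 1, len(values))
    ]


class MovingAverageTest(unittest.TestCase):
    def test_typical_input(self):
        self.assertEqual(moving_average([1, 2, 3, 4], 2), [1.5, 2.5, 3.5])

    def test_window_longer_than_data(self):
        # Too little data yields no averages rather than an error.
        self.assertEqual(moving_average([1, 2], 3), [])

    def test_invalid_window(self):
        with self.assertRaises(ValueError):
            moving_average([1, 2, 3], 0)
```

Saved in a file, these tests can be run with `python -m unittest`. The edge cases (too little data, an invalid window) are the kind of behavior that tends to change by accident after a revision or a package upgrade.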

4. 資料庫的選擇與管理 Database Selection and Management

資料的收集,與資料的產出結果,皆需要資料庫做為儲存媒介,除了常見的關聯式資料庫,也可能必須因應資料的特性與型態,選擇NoSQL資料庫做為儲存媒介。

既然有資料庫,就需要資料庫管理員做帳號管理、權限控制等規劃與實作,也需要因應資料的需求,做資料表設計與關聯等操作,最後再依照資料處理流程與管理機制,彈性調整資料庫的設定以因應自動化的需求。

Both collected data and output data need a database as a storage medium. Besides the common relational databases, a NoSQL database may be the better choice depending on the characteristics and shape of the data.

Once there is a database, a database administrator needs to plan and implement account management and permission control. Tables and their relationships also need to be designed around the data requirements. Finally, database settings should be adjusted flexibly to fit the data processing flow and management mechanisms, so that automation needs are met.
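The table design and relationship part can be sketched with Python's built-in sqlite3 module. The `source` and `measurement` tables are hypothetical, and SQLite is used only because it needs no server; account management and permission control are not covered by this sketch.

```python
import sqlite3


def build_database():
    """Create an in-memory database with two related tables and sample rows."""
    conn = sqlite3.connect(":memory:")
    # SQLite enforces foreign keys only when this pragma is switched on.
    conn.execute("PRAGMA foreign_keys = ON")
    conn.executescript(
        """
        CREATE TABLE source (
            id   INTEGER PRIMARY KEY,
            name TEXT NOT NULL UNIQUE
        );
        CREATE TABLE measurement (
            id        INTEGER PRIMARY KEY,
            source_id INTEGER NOT NULL REFERENCES source(id),
            value     REAL NOT NULL
        );
        """
    )
    conn.execute("INSERT INTO source (id, name) VALUES (1, 'sensor-a')")
    conn.executemany(
        "INSERT INTO measurement (source_id, value) VALUES (?, ?)",
        [(1, 20.5), (1, 21.5)],
    )
    return conn


def average_by_source(conn):
    """Join the two tables and return (source name, average value) pairs."""
    query = """
        SELECT s.name, AVG(m.value)
        FROM source AS s
        JOIN measurement AS m ON m.source_id = s.id
        GROUP BY s.name
    """
    return conn.execute(query).fetchall()
```

With the foreign key in place, inserting a measurement for a source that does not exist raises `sqlite3.IntegrityError`, so the relationship is enforced by the database rather than by every program that writes to it.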

5. CICD Pipeline設計 CICD Pipeline Design

不知道大家有沒有和我一樣,初次認識Git時有相同的問題:如果Git只是單純的程式碼版本控制工具,那我怎麼會有動力要將程式碼推上Git呢?又如何決定什麼時候要推上Git呢?

原來Git之後通常會由DevOps工程師設置CICD Pipeline做程式自動建置、自動部署的動作,這些自動執行的管線設計所使用的程式碼,都是源自於Git Repository,也就是說如果要讓我們辛苦撰寫的程式碼可以在正式環境執行,就必須推上Git。

此外這些程式碼也必須經過前述靜態分析、資料檢查、測試案例等重重把關,我們才能夠安心將程式與程式產出的資料,在正式環境發布,這些重重把關機制的實作,就必須仰賴CICD Pipeline囉!

I wonder if you had the same questions I did when you first met Git: if Git is just a code version control tool, what would motivate me to push my code to it? And how do I decide when to push?

It turns out that, downstream of Git, DevOps engineers usually set up CICD pipelines that build and deploy programs automatically, and the code these pipelines use comes from the Git repository. In other words, for our hard-written code to run in the production environment, it has to be pushed to Git.

In addition, the code must pass the static analysis, data checks, test cases, and other gates described earlier before we can confidently release the program, and the data it produces, to production. Implementing these gates is exactly what CICD pipelines are for!
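The gating idea can be sketched in a few lines of Python. This is only a model of the control flow, not how any particular CICD product is configured: `run_pipeline` is a hypothetical function, and each lambda stands in for a real step such as a Pylint run or a test run.

```python
def run_pipeline(stages):
    """Run (name, check) stages in order and stop at the first failure."""
    passed = []
    for name, check in stages:
        if not check():
            return {"released": False, "failed_stage": name, "passed": passed}
        passed.append(name)
    return {"released": True, "failed_stage": None, "passed": passed}


stages = [
    ("static analysis", lambda: True),   # e.g. a Pylint run
    ("data checks", lambda: True),
    ("unit tests", lambda: False),       # a failing test blocks the release
    ("build and deploy", lambda: True),  # never reached in this example
]
report = run_pipeline(stages)
# report["released"] is False and report["failed_stage"] == "unit tests"
```

The point of the ordering is that deployment sits behind every check, so code that fails any gate never reaches production.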

6. 資料出貨管理機制 Data Delivery Management

如果以資料供應商的角度思考,資料出貨給客戶時,可能好幾種交貨方式,不同的方式可能都有特殊的要求與限制,可能是以檔案交貨,可能是以API提供資料,可能是透過資料供應商自己的自有平台,也可能是客製化的資料產品,不同的出貨方式都會帶來衍伸的資料處理成本與管理維護成本。

此時可能需要透過ETL工具或Airflow這類的資料流程管理工具,協助串連資料,並於不同的出貨平台做資料同步的動作,這樣的工作任務需要資料工程師、DevOps工程師、PM共同協調合作,部分工作內容甚至有可能會移至CICD Pipeline處理,這方面沒有標準答案,端視資料出貨管理的情境而定。

From a data supplier's perspective, there may be several ways to deliver data to customers, each with its own requirements and restrictions. Data may be delivered as files, served through an API, offered on the supplier's own platform, or packaged as a customized data product. Each delivery method brings additional data processing costs and management and maintenance costs.

ETL tools or data workflow management tools such as Airflow can help connect the data and keep it synchronized across delivery platforms. This work requires coordination among data engineers, DevOps engineers, and PMs, and some of it may even move into the CICD pipeline. There is no standard answer here; it depends on the delivery scenario.
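One way to picture "one dataset, several delivery methods" is the sketch below, which renders the same records for file delivery and for API delivery. The function names and the payload shape are assumptions made for illustration; a real setup would likely schedule such steps in a workflow tool rather than call them by hand.

```python
import csv
import io
import json


def to_csv(records):
    """Render records as CSV text for file-based delivery."""
    if not records:
        return ""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=list(records[0]), lineterminator="\n")
    writer.writeheader()
    writer.writerows(records)
    return buffer.getvalue()


def to_api_payload(records):
    """Render records as a JSON body for API-based delivery."""
    return json.dumps({"count": len(records), "items": records})


# Each delivery channel maps to the function that formats data for it.
DELIVERY_CHANNELS = {"file": to_csv, "api": to_api_payload}


def deliver(records, channels):
    """Produce one output per requested channel from the same source records."""
    return {name: DELIVERY_CHANNELS[name](records) for name in channels}
```

Every extra channel adds another formatter to maintain and test, which is a small-scale version of the added processing and maintenance cost described above.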

應用程式系統 Application System

#infra #coding #Python #system_design #DevOps

開發「應用程式系統」所需要注意的面向更多,除了先前所提「資料處理」方面的6項要點,另外還有以下新的6項要點:

There are more aspects to consider when developing application systems. In addition to the 6 key points of data processing above, there are 6 more:

7. 應用程式框架設計 Application Framework Design

應用程式與資料處理程式相比,複雜性高很多,如何做到前端、後端分離,以及日後改版、更新、擴充的可維護性,是全端工程師或系統架構師必須思考、規劃的重要議題。

例如MVC (Model-view-controller)是常見的框架設計方式,將資料庫ORM的操作以Model相關的目錄統一管理,將前端視圖與路由以View相關的目錄統一管理,最後將程式業務邏輯、連線等功能以Controller相關的目錄統一管理,以確保龐大的應用程式結構,可以容易閱讀與維護。

Applications are far more complex than data processing programs. How to separate the front end from the back end, and how to keep the system maintainable through future revisions, updates, and extensions, are important questions that full-stack engineers or system architects must think through and plan for.

For example, MVC (Model-View-Controller) is a common way to structure an application. Database ORM operations are managed under Model-related directories, front-end views and routes under View-related directories, and business logic, connections, and similar functions under Controller-related directories. This keeps a large application structure easy to read and maintain.
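The separation of responsibilities can be shown in miniature. In this sketch the three classes are hypothetical and live in one file; in a real project each would sit in its own directory, and the model would talk to a database through an ORM instead of holding a list.

```python
class TaskModel:
    """Model: owns the data and how it is stored (a list stands in for a database)."""

    def __init__(self):
        self._tasks = []

    def add(self, title):
        self._tasks.append(title)

    def all(self):
        return list(self._tasks)


class TaskView:
    """View: turns data into what the user sees."""

    @staticmethod
    def render(tasks):
        if not tasks:
            return "No tasks"
        return "\n".join(f"{i}. {title}" for i, title in enumerate(tasks, start=1))


class TaskController:
    """Controller: applies business rules and connects model and view."""

    def __init__(self, model, view):
        self.model = model
        self.view = view

    def create_task(self, title):
        title = title.strip()
        if not title:
            raise ValueError("title must not be empty")
        self.model.add(title)
        return self.view.render(self.model.all())
```

Because the view never touches storage and the model never formats output, either one can be replaced (a new front end, a different database) without rewriting the other.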

8. CICD設計-資訊安全 CICD Design - Information Security

應用程式系統於上線前,通常都會因為法遵或ISO的需求,執行弱點掃描,例如以Python開發的應用程式系統,或許可以考慮使用Bandit之類的靜態掃描工具,然而實際上應該選用何種工具,主要仍以合規為主。

此外,每次更新應用程式的程式碼,發布新版的應用程式之前,是否都需要產製這些報告,都值得思考是否要納入CICD Pipeline自動執行。

Before an application system goes live, it is usually scanned for vulnerabilities to meet regulatory compliance or ISO requirements. For an application developed in Python, for example, you might consider a static scanning tool such as Bandit. Which tool to choose, however, should be driven mainly by the compliance requirements.

It is also worth considering whether these reports need to be produced every time the application code is updated and a new version is released, and if so, whether to add the scan to the CICD pipeline so it runs automatically.
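To give a feel for what a static scan looks for, the sketch below shows one pattern that security scanners for Python commonly report, a call to `eval` on external input, next to a safer alternative. The `parse_setting` function is a hypothetical example, and the exact findings depend on the tool and its configuration.

```python
import ast


def parse_setting(text):
    """Parse a literal such as "[1, 2]" or "{'retries': 3}" without executing code."""
    # eval(text) would execute arbitrary expressions from the input, which is
    # the kind of call a static security scanner is designed to report.
    return ast.literal_eval(text)
```

`ast.literal_eval` accepts only Python literals and raises `ValueError` for anything else, such as a function call, so hostile input is rejected instead of executed.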

9. CICD設計-Build Image CICD Design - Build Image

傳統做法要將應用程式部署在主機上,必須手動安裝網頁伺服器,調整網路、資安、憑證等設定事項,最後再啟動應用程式,這樣的動作其實是制式的、固定的,因此現在的趨勢是將這些設定動作,以撰寫Dockerfile等方式Build為Image,再存放至Registry,準備後續部署的動作。

Traditionally, deploying an application on a host means manually installing a web server, adjusting network, security, and certificate settings, and then starting the application. These steps are routine and fixed, so the current trend is to capture them in a Dockerfile (or similar), build them into an image, and store the image in a registry, ready for subsequent deployment.

10. CICD設計-部署 CICD Design - Deployment

既然要Build Image,當然就要以Container的方式做部署,最常見的就是透過Argo CD自動部署到Kubernetes (K8s)叢集,這樣的自動化操作需要對K8s本身的運作有深厚的理解,相關部署應用程式的Yaml檔,甚至是K8s本身如果要以Terraform實現IaC (Infrastructure as Code),這些高難度的自動化部署動作才能夠被DevOps工程師穩健地實踐。

應用程式部署後,仍然有相當多的監控任務需要留意,例如以Prometheus和Grafana監控K8s Pod的運作情形,又例如以Elasticsearch、Fluentd/Fluent Bit、Kibana (EFK)管理日誌等,這些任務都是在應用程式部署之後,要特別留意的。

Since we build an image, we naturally deploy it as a container. A very common approach is to deploy automatically to a Kubernetes (K8s) cluster through Argo CD. This kind of automation requires a deep understanding of how K8s works, of the YAML files used to deploy the application, and even of Terraform if K8s itself is to be provisioned as IaC (Infrastructure as Code). Only with that understanding can DevOps engineers carry out these demanding automated deployments reliably.

After the application is deployed, there are still many monitoring tasks to take care of, such as monitoring K8s Pods with Prometheus and Grafana, and managing logs with Elasticsearch, Fluentd/Fluent Bit, and Kibana (EFK).

11. 整合測試與壓力測試 Integration Testing and Stress Testing

應用程式部署完畢之後,品管工程師依產品或專案的合規需求進行測試,最常見的是整合測試與壓力測試;整合測試目的為試驗所有的服務功能如API、資料庫等整合在一起操作時,是否如預期執行,可以使用Selenium之類的自動化工具進行試驗;至於壓力測試則是確認應用程式乘載大量使用者同時操作時,其回應時間是否在可以接受的範圍,可以使用Pytest或Jmeter之類的工具進行試驗。

After the application is deployed, quality assurance engineers test it according to the compliance requirements of the product or project. The most common tests are integration testing and stress testing. Integration testing checks whether all services, such as APIs and databases, behave as expected when they operate together; automation tools such as Selenium can be used for this. Stress testing confirms whether the application's response time stays within an acceptable range when a large number of users operate it simultaneously; tools such as Pytest or JMeter can be used for this.
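The idea behind a stress test can be sketched with the standard library alone. Here `fake_endpoint` is a hypothetical stand-in for a call to the deployed application, and the user count and time limit are arbitrary; a dedicated tool would add ramp-up, reporting, and far larger loads.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def timed_call(handler, payload):
    """Call the handler once and return the elapsed time in seconds."""
    start = time.perf_counter()
    handler(payload)
    return time.perf_counter() - start


def stress_test(handler, users, max_seconds):
    """Simulate `users` simultaneous requests and compare the slowest to a limit."""
    with ThreadPoolExecutor(max_workers=users) as pool:
        durations = list(pool.map(lambda n: timed_call(handler, n), range(users)))
    slowest = max(durations)
    return {"requests": len(durations), "slowest": slowest, "ok": slowest <= max_seconds}


def fake_endpoint(payload):
    """Hypothetical stand-in for a request to the deployed application."""
    time.sleep(0.01)
    return {"echo": payload}
```

Calling `stress_test(fake_endpoint, users=20, max_seconds=1.0)` returns a small report whose `ok` field says whether the slowest response stayed within the limit, which is the acceptance question a stress test is meant to answer.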

12. CDN (Certificate、WAF、Cache)、24x7、LDAP、Queue等架構中各式服務等雜項問題 Miscellaneous Issues Related to CDN (Certificate, WAF, Cache), 24x7, LDAP, Queue, and Other Services in the Architecture

應用程式正式上線使用之前,仍有許多雜項問題待討論、待處理。如果應用程式服務的市場為廣大的全世界,那麼就要考慮加上CDN,以快取改善使用者體驗,連帶憑證與網頁應用程式防火牆等基礎設施,可能都必須於此管理與強化,也必須針對全世界的使用者操作情形,規劃全天候(24x7)的監控服務。

如果只是組織內部使用的小型應用程式系統服務,則可能因為使用者需求的改變,而要求客製化的服務。例如與組織既有的帳號進行整合,改為使用LDAP登入或做SSO登入;又例如使用者頻繁地爭搶資料讀取、計算與寫入的程序,可能得考慮將既有的應用程式解耦,加上訊息佇列的服務,讓各個處理程序單純化,利於日後擴展。

諸如此類的問題實在太多了,系統架構師必須解析與評斷應該如何處理這些系統施作上的問題,於此僅羅列幾個常見的問題於此供參。

Many miscellaneous issues still need to be discussed and resolved before the application officially launches. If the application serves a worldwide market, consider adding a CDN so that caching improves the user experience. Related infrastructure such as certificates and a web application firewall (WAF) may also need to be managed and hardened at this layer, and round-the-clock (24x7) monitoring should be planned for users around the world.

If it is only a small application used within an organization, changing user needs may lead to requests for customization. For example, you may be asked to integrate with the organization's existing accounts by switching to LDAP login or SSO. As another example, if users frequently contend for the same data reading, computation, and writing procedures, you may need to consider decoupling the existing application and adding a message queue service, which keeps each processing step simple and makes future scaling easier.
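The decoupling effect of a message queue can be sketched in-process with Python's `queue` module. This is only an analogy for a real message queue service: the `worker` and `process` functions are hypothetical, and squaring a number stands in for a read, compute, and write step.

```python
import queue
import threading


def worker(jobs, results):
    """Consume jobs one at a time so processing steps never compete with each other."""
    while True:
        job = jobs.get()
        if job is None:            # sentinel: no more work
            jobs.task_done()
            break
        results.append(job * job)  # stands in for a read-compute-write step
        jobs.task_done()


def process(numbers):
    """Producers only enqueue; a single consumer does the processing in order."""
    jobs = queue.Queue()
    results = []
    consumer = threading.Thread(target=worker, args=(jobs, results))
    consumer.start()
    for number in numbers:
        jobs.put(number)
    jobs.put(None)
    jobs.join()      # wait until every queued job has been handled
    consumer.join()
    return results
```

The producers never wait on the computation and never touch the results directly, so more consumers can be added later without changing the code that submits work.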

There are far too many issues of this kind. System architects must analyze them and judge how to handle each one; I list only a few common ones here for reference.
